BMVA2008-Talk


Machine vision for macro, micro and nano robot environments

Bala Amavasai, Jan Wedekind, Manuel Boissenin, Fabio Caparrelli & Arul Selvan

Introduction

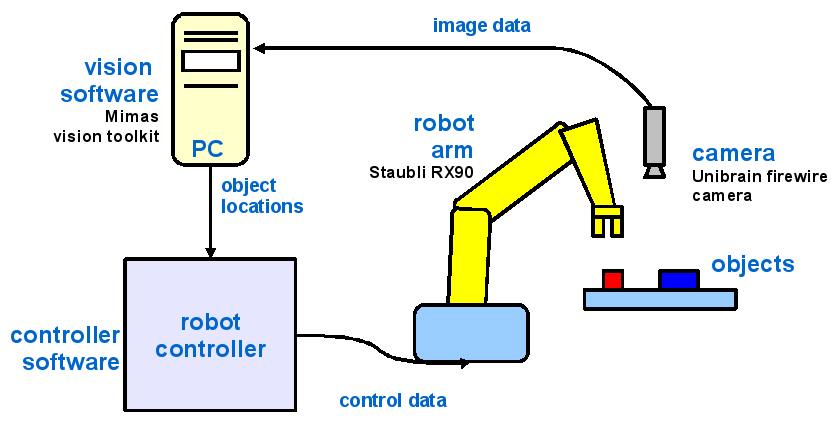

Closed-loop control of a robot system depends on sensor feedback. Cameras are now fairly common and are often among the key sensors. The processing pipeline includes image capture from hardware, pre-processing, feature extraction or segmentation, representation or description, recognition and interpretation. Vision researchers generally specialise in one or more of these fields, but it is the combination of all of them that constitutes a complete vision system.
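The stages listed above can be sketched as a chain of small functions. This is a minimal illustration only, not the software used in the projects: the function names, the 3x3 mean filter, the fixed threshold and the area-based matching are all assumptions chosen to keep the example short, and the interpretation stage is left out.

```python
def preprocess(image):
    """Pre-processing: 3x3 mean filter (clamped at the borders) to suppress noise."""
    height, width = len(image), len(image[0])
    smoothed = []
    for y in range(height):
        row = []
        for x in range(width):
            window = [image[j][i]
                      for j in range(max(0, y - 1), min(height, y + 2))
                      for i in range(max(0, x - 1), min(width, x + 2))]
            row.append(sum(window) / len(window))
        smoothed.append(row)
    return smoothed


def segment(image, threshold):
    """Segmentation: binary mask of the pixels brighter than the threshold."""
    return [[1 if pixel > threshold else 0 for pixel in row] for row in image]


def describe(mask):
    """Description: area and centroid of the foreground, or None if it is empty."""
    points = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    if not points:
        return None
    area = len(points)
    centroid = (sum(x for x, _ in points) / area, sum(y for _, y in points) / area)
    return {"area": area, "centroid": centroid}


def recognise(description, models):
    """Recognition: name of the stored model whose area is closest (hypothetical rule)."""
    return min(models, key=lambda name: abs(models[name] - description["area"]))


# Synthetic capture: a bright 3x3 object on a dark 8x8 background.
image = [[10] * 8 for _ in range(8)]
for y in range(2, 5):
    for x in range(3, 6):
        image[y][x] = 200

description = describe(segment(preprocess(image), threshold=90))
label = recognise(description, {"small part": 9, "large part": 25})
print(description, label)
```

Each stage only consumes the output of the previous one, so any single stage can be swapped for a more robust algorithm without touching the rest.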

Developing autonomous robot systems or manipulation systems calls for robust and deterministic algorithms. Simple algorithms that perform consistently and within real-time constraints are often preferred.

Several issues need to be addressed to increase the robustness of the vision system. The first is the operating environment: if it can be controlled, the task becomes substantially easier, but this is often not possible. The second is the type of objects being handled. These objects need to be modelled and stored in a way that makes them easy to recall and match. The third is the presence of noise. Here, noise may be either unwanted texture that distorts the contour of the object or clutter in the scene. The presence of noise therefore increases the need for pre-processing.

This paper presents assembly and manipulation tasks from four projects that fall within the domains of macro, micro and nano manipulation. The common factor between the four projects is the use of vision for feedback.

Vision systems for manipulation

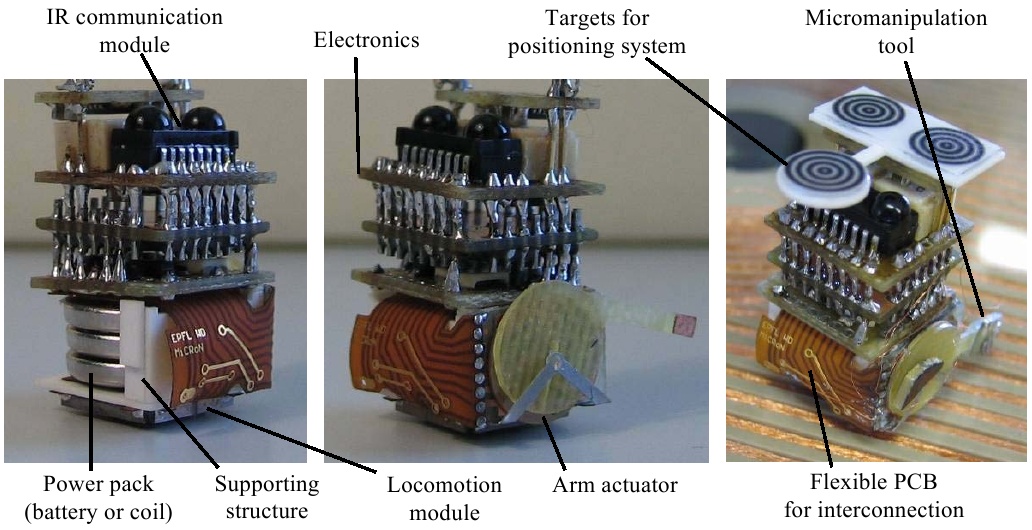

In the sub-macro domain, we shall demonstrate the manipulation of objects for the EU FP5 MiCRoN project. Here objects are manipulated through 4 degrees of freedom, so a variation of the Bounded Hough Transform is used to store shape information across the various orientations. The robots are approximately 1 cm³ in size and are designed to operate wirelessly and cooperatively. Piezo actuators are used for locomotion and for moving the single manipulator arm. One of the problems encountered by the vision system is the limited depth of field: textures on the object may be either in or out of focus. The representation used in the vision system accounts for this by modelling each manipulated object as a focus stack.
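To illustrate the idea of a focus stack, the sketch below stores several views of the same object texture at increasing defocus and picks the slice that best matches an observed patch. It is a toy under stated assumptions, not the MiCRoN implementation: defocus is simulated with a repeated box blur, matching uses the sum of squared differences, and all function names are hypothetical.

```python
def box_blur(image):
    """Simulate one step of defocus with a 3x3 mean filter (clamped at the borders)."""
    height, width = len(image), len(image[0])
    return [[sum(image[j][i]
                 for j in range(max(0, y - 1), min(height, y + 2))
                 for i in range(max(0, x - 1), min(width, x + 2)))
             / ((min(height, y + 2) - max(0, y - 1)) * (min(width, x + 2) - max(0, x - 1)))
             for x in range(width)]
            for y in range(height)]


def build_focus_stack(sharp, depth):
    """Stand-in for a captured focus stack: slice k is the sharp view blurred k times."""
    stack = [sharp]
    for _ in range(depth - 1):
        stack.append(box_blur(stack[-1]))
    return stack


def ssd(a, b):
    """Sum of squared differences between two equally sized grey-level patches."""
    return sum((pa - pb) ** 2
               for row_a, row_b in zip(a, b)
               for pa, pb in zip(row_a, row_b))


def best_slice(stack, patch):
    """Return (index, score) of the stack slice most similar to the observed patch."""
    scores = [ssd(layer, patch) for layer in stack]
    index = min(range(len(stack)), key=scores.__getitem__)
    return index, scores[index]


# A checkerboard texture stands in for the surface of the manipulated object.
sharp = [[255 if (x + y) % 2 == 0 else 0 for x in range(7)] for y in range(7)]
stack = build_focus_stack(sharp, depth=4)

# An observation that is out of focus: slice 2 with a small brightness offset.
observed = [[value + 1 for value in row] for row in stack[2]]
print(best_slice(stack, observed))
```

Because the model contains blurred as well as sharp views, an out-of-focus observation can still be matched, and the index of the best slice gives a rough cue about the distance of the object from the focal plane.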

In the micro domain, we shall look at the EU FP4 Miniman project. The Miniman robots are essentially decimetre-sized robots designed to operate under an optical microscope or a scanning electron microscope. A complete vision system has been developed to manipulate a variety of objects, from the sub-macro to the micro domain. The issues that need to be addressed here include the locomotion of the main platform as well as manipulator guidance. Two tasks will be demonstrated, and the algorithms used will be discussed. The first task involves the assembly of a micro-lens system. The second involves teleoperated control for cell manipulation.

In the nano domain, we shall look at vision systems that operate within a transmission electron microscope (TEM). In particular we shall address the vision tasks that need to be carried out in guiding a 5-DOF nanoindenter for conducting experiments in the TEM.

Videos and further information about the techniques presented are available at the MMVL Wiki site: http://vision.eng.shu.ac.uk/mmvlwiki/index.php/BMVA2008-talk


Demonstration of the Lucas-Kanade tracker applied to high-magnification and low-magnification video. The indenter is lost where it moves too fast for the tracking algorithm.

The software can be configured for a variety of environments


A vision-guided Stäubli robot performing pick-and-place operations (15.8 MByte WMV video)
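The tracking loss noted for the Lucas-Kanade demonstration above is consistent with the small-motion assumption behind the method: brightness constancy is linearised around the previous frame, which only holds while the displacement is small relative to the size of the tracked feature. The following one-dimensional sketch shows the effect. It is an illustration only, assuming a Gaussian intensity profile and a single, non-pyramidal update step; it is not the tracker used in the videos.

```python
import math


def gaussian_blob(length, centre, sigma=3.0):
    """1D intensity profile with a single bright feature at `centre`."""
    return [math.exp(-((x - centre) ** 2) / (2 * sigma ** 2)) for x in range(length)]


def lucas_kanade_step(previous, current):
    """Estimate the shift from `previous` to `current` in one Lucas-Kanade step.

    Brightness constancy, current(x) = previous(x - d), is linearised as
    current(x) ~ previous(x) - d * previous'(x) and solved for d by least squares.
    """
    numerator = 0.0
    denominator = 0.0
    for x in range(1, len(previous) - 1):
        gradient = (previous[x + 1] - previous[x - 1]) / 2.0  # central difference
        numerator += gradient * (previous[x] - current[x])
        denominator += gradient * gradient
    return numerator / denominator


frame = gaussian_blob(101, centre=40)
slow = lucas_kanade_step(frame, gaussian_blob(101, centre=40.5))  # true shift 0.5
fast = lucas_kanade_step(frame, gaussian_blob(101, centre=55))    # true shift 15
print(f"true shift 0.5 -> estimate {slow:.2f}")
print(f"true shift 15  -> estimate {fast:.2f}")
```

For the small shift the estimate stays close to the true value, whereas for the large shift the two profiles barely overlap and the estimate collapses towards zero, so the tracker stays behind while the feature moves on. Coarse-to-fine (pyramidal) variants and higher frame rates are common ways of reducing this problem.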

Acknowledgements

This research is partially supported by the Nanorobotics EPSRC 'Basic Technology' grant no. GR/S85696/01.