Depth from Focus


=Depth from Focus=

==3D surface metrology==

* You need to record a focus stack with your microscope and a digital camera.
* The results will be even better if the illumination optics of the microscope can project a pattern (the pattern adds contrast to otherwise featureless surfaces).
* Non-destructive measurement of surface profiles
* With our experimental setup we observed the following (the results will of course depend on the quality of the microscope):
** Vertical resolution <math>\ge 0.2\ \mu m</math> (depending on aperture size, magnification, projection pattern and the surface properties of the object).
** Lateral resolution <math>\ge 2\ \mu m</math> (likewise depending on the setup).
* '''Open Source''' (you are free to improve the code yourself and to redistribute it).

In general one can say: ''The lower the depth of field, the higher the resolution of the reconstruction.''

At higher magnification (assuming a constant numerical aperture) the resolution of the reconstruction increases. The trade-off is that the reconstruction covers a smaller area. This can be overcome by lateral stitching (e.g. the cogwheel below).
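To make the statement about magnification concrete: a commonly quoted textbook approximation (not taken from this page) for the total depth of field of a microscope is d = λn/NA² + n·e/(M·NA), with wavelength λ, refractive index n of the medium in front of the objective, numerical aperture NA, total lateral magnification M and detector resolution e (for example the camera pixel pitch). With NA held constant, only the second term depends on M, so a higher magnification gives a shallower depth of field. The sketch below simply evaluates this formula for hypothetical settings; the function name is illustrative.

```python
def depth_of_field(wavelength_um, n, na, magnification, pixel_pitch_um):
    """Approximate total depth of field in micrometres.

    Uses the textbook approximation
        d = wavelength * n / NA**2 + n * e / (M * NA)
    The first term is the diffraction-limited (wave-optical) part, the second
    the geometric part set by the detector resolution e in the image plane.
    """
    wave_term = wavelength_um * n / na ** 2
    geometric_term = n * pixel_pitch_um / (magnification * na)
    return wave_term + geometric_term


if __name__ == "__main__":
    # Hypothetical settings: green light (0.55 um), dry objective (n = 1),
    # constant NA = 0.3, camera pixel pitch 6.45 um; only M is varied.
    for m in (10, 20, 50):
        d = depth_of_field(0.55, 1.0, 0.3, m, 6.45)
        print(f"M = {m:3d}: depth of field ~ {d:.2f} um")
```

Since the wave-optical term does not depend on M, increasing the magnification alone eventually stops helping; beyond that point only a larger numerical aperture reduces the depth of field further.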

==Demonstration==

Here are some typical microscope images (Surfi-Sculpt refers to objects that have been [http://www.twi.co.uk/j32k/unprotected/band_1/surfi-sculpt_index.html shaped using a power beam]).

{|align="center" | {|align="center" | ||

|- | |- | ||

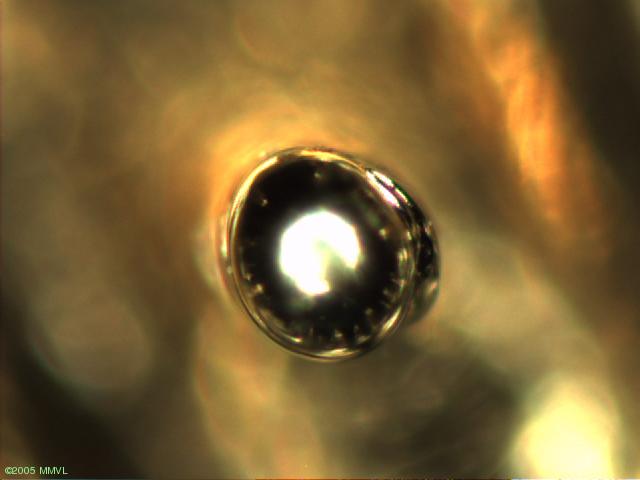

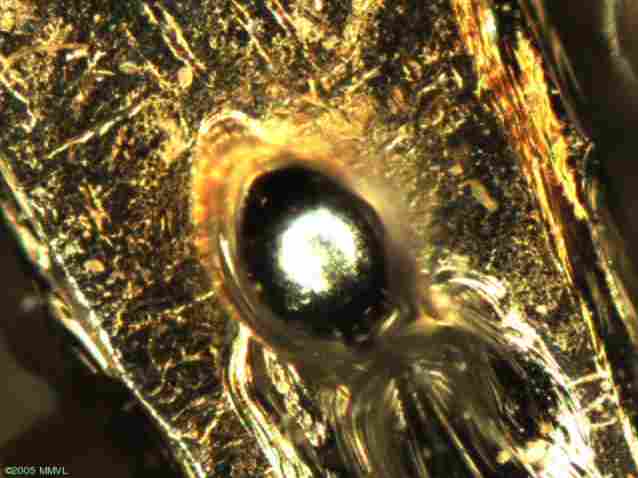

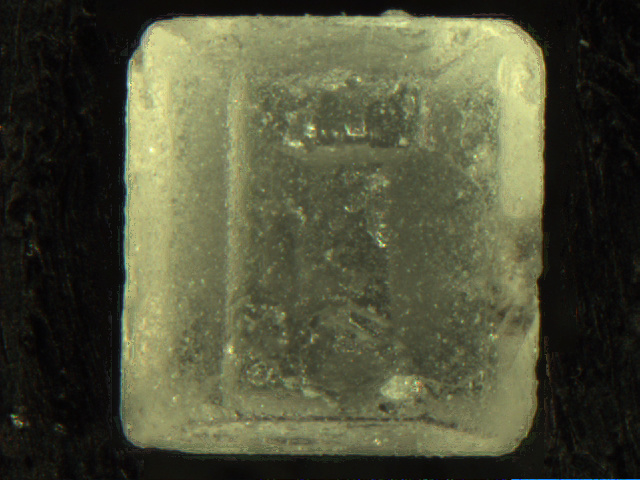

| − | |[[Image:grid_0055.jpg|thumb| | + | |[[Image:grid_0055.jpg|thumb|180px|First surfi-sculpt object, Leica DM LAM]]||[[Image:wheel_0136.jpg|thumb|180px|Second surfi-sculpt object, Leica DM LAM]]||[[Image:BrokenSuevit1_001500.png|thumb|180px|Piece of Suevit (enamel like material from meteorite impact) from the [http://en.wikipedia.org/wiki/Ries Nördlinger Ries], Leica DM RXA]]||[[Image:Hair20.png|thumb|180px|Micro-camera image of a hair on top of a laser printout]]||[[Image:Coin063.jpg|thumb|180px|Surface of 10-pence coin, Leica DM LAM]] |

|- | |- | ||

|} | |} | ||

| Line 28: | Line 23: | ||

{|align="center" | {|align="center" | ||

|- | |- | ||

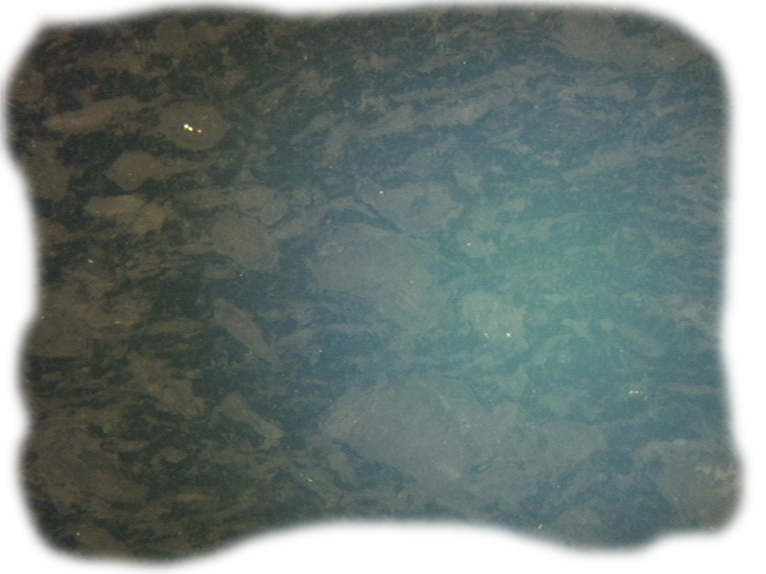

| − | |[[Image:griddv.jpg|thumb| | + | |[[Image:griddv.jpg|thumb|180px|Extended depth of view for first object]]||[[Image:wheeldv.jpg|thumb|180px|Extended depth of view for second object]]||[[Image:SuevitDV.png|thumb|180px|Extended depth of view for Suevit (fringes have been removed manually)]]||[[Image:HairDV.png|thumb|180px|Extended depth of view for the hair]]||[[Image:Dvcoin.png|thumb|180px|Extended depth-of-field image showing part of the 10-pence coin (compare with 1.39 MByte [http://vision.eng.shu.ac.uk/jan/coin.avi video of focus stack])]] |

|- | |- | ||

|} | |} | ||
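A minimal sketch of how an extended depth-of-field image can be fused from a focus stack, assuming grayscale slices stored as lists of rows: for every pixel, take the value from the slice in which that pixel is sharpest. The focus measure used here (squared Laplacian summed over a small window) is one common choice and is not necessarily the one used in depthoffocus-0.1 or in the HornetsEye example; all function names are hypothetical.

```python
def laplacian_energy(img):
    """Squared 4-neighbour Laplacian of a grayscale image (list of rows).

    Pixels outside the image are replaced by the nearest edge pixel.
    """
    h, w = len(img), len(img[0])

    def px(y, x):
        return img[min(max(y, 0), h - 1)][min(max(x, 0), w - 1)]

    out = []
    for y in range(h):
        row = []
        for x in range(w):
            lap = px(y - 1, x) + px(y + 1, x) + px(y, x - 1) + px(y, x + 1) - 4 * px(y, x)
            row.append(float(lap * lap))
        out.append(row)
    return out


def box_sum(m, r=1):
    """Sum of m over a (2r+1) x (2r+1) window, clipped at the image border."""
    h, w = len(m), len(m[0])
    return [[sum(m[yy][xx]
                 for yy in range(max(0, y - r), min(h, y + r + 1))
                 for xx in range(max(0, x - r), min(w, x + r + 1)))
             for x in range(w)]
            for y in range(h)]


def extended_depth_of_field(stack, r=1):
    """Fuse a focus stack (list of equally sized grayscale images).

    Returns (fused_image, index_map): each pixel is copied from the slice with
    the highest local focus measure, and index_map records that slice index.
    """
    measures = [box_sum(laplacian_energy(s), r) for s in stack]
    h, w = len(stack[0]), len(stack[0][0])
    fused = [[0] * w for _ in range(h)]
    index = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # max() keeps the first slice on ties (e.g. featureless regions).
            best = max(range(len(stack)), key=lambda k: measures[k][y][x])
            fused[y][x] = stack[best][y][x]
            index[y][x] = best
    return fused, index
```

The index map returned alongside the fused image is already a coarse height map: multiplying each slice index by the focus step gives a height quantised to that step. Featureless regions produce a zero focus measure in every slice, which is why a projected illumination pattern helps.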

| Line 35: | Line 30: | ||

{|align="center" | {|align="center" | ||

|- | |- | ||

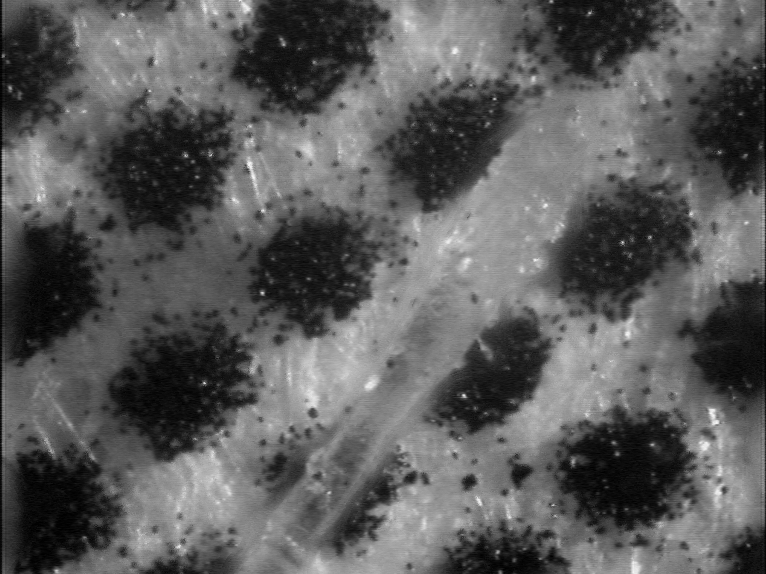

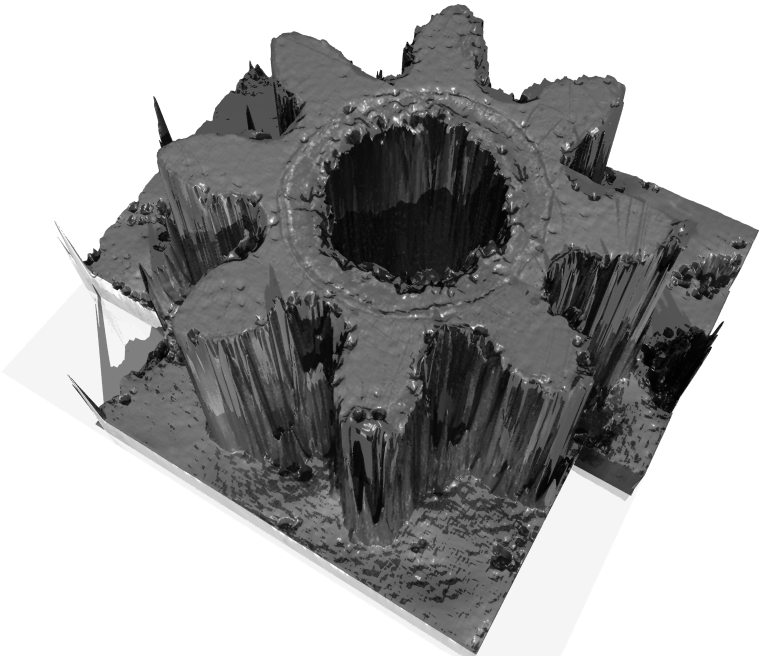

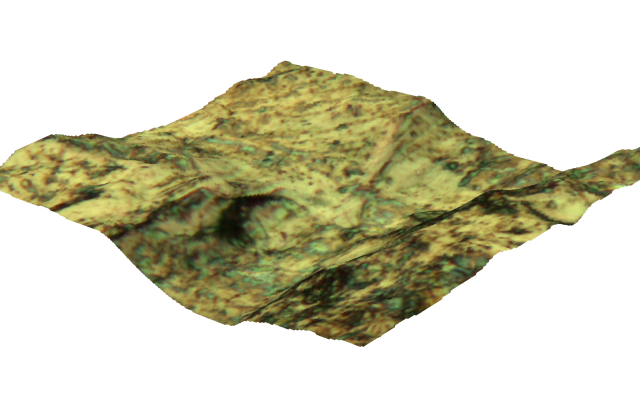

| − | |[[Image:grid1080.jpg|thumb| | + | |[[Image:grid1080.jpg|thumb|180px|3D reconstruction of first object [http://vision.eng.shu.ac.uk/jan/grid1.avi (742kB video)]]]||[[Image:wheel1008.jpg|thumb|180px|3D reconstruction of second object [http://vision.eng.shu.ac.uk/jan/wheel1.avi (725kB video)]]]||[[Image:suevit20.png||thumb|180px|3D reconstruction of suevit [http://vision.eng.shu.ac.uk/jan/suevit.mpg (1.4MB video)]]]||[[Image:Hair.png|thumb|180px|3D reconstruction of hair [http://vision.eng.shu.ac.uk/jan/hair.mpg (1.4MB video)]]]||[[Image:Coin.png|thumb|180px|3D reconstruction of 10-pence coin surface. The profile is amplified 5 times [http://vision.eng.shu.ac.uk/jan/coin3D.avi (1.0 MByte video)]]] |

|- | |- | ||

|} | |} | ||
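Taking the index of the sharpest slice per pixel gives heights quantised to the focus step. One common refinement, assumed here rather than taken from the original software, fits a parabola through the focus measure of the best slice and its two neighbours, which can yield heights finer than the step size. `refine_focus_peak` is a hypothetical name; `z0` (height of the first slice) and `dz` (spacing between slices) are assumed parameters.

```python
def refine_focus_peak(measures, z0, dz):
    """Estimate the height of one pixel from its focus curve.

    measures[k] is the focus measure of the pixel in slice k, which was
    recorded at height z0 + k * dz. The best slice is refined by fitting a
    parabola through it and its two neighbours (three-point interpolation).
    """
    k = max(range(len(measures)), key=lambda i: measures[i])
    if k == 0 or k == len(measures) - 1:
        # Peak at either end of the stack: one neighbour is missing.
        return z0 + k * dz
    a, b, c = measures[k - 1], measures[k], measures[k + 1]
    denom = a - 2 * b + c
    # Vertex of the parabola through (-1, a), (0, b), (1, c).
    offset = 0.0 if denom == 0 else 0.5 * (a - c) / denom
    return z0 + (k + offset) * dz
```

For a focus curve that really is quadratic around its peak the interpolation is exact; for real data it is an approximation whose accuracy depends on noise and on how many slices fall within the depth of field.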

| Line 41: | Line 36: | ||

{|align="center" | {|align="center" | ||

|- | |- | ||

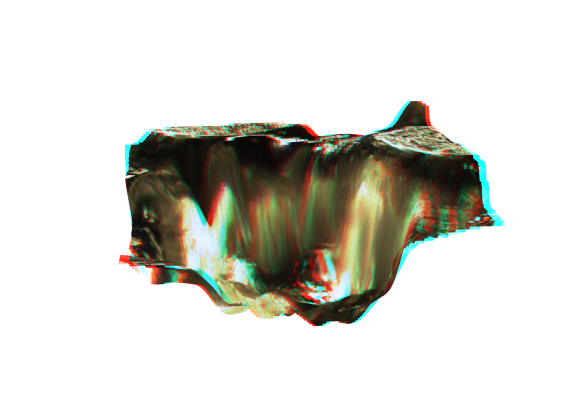

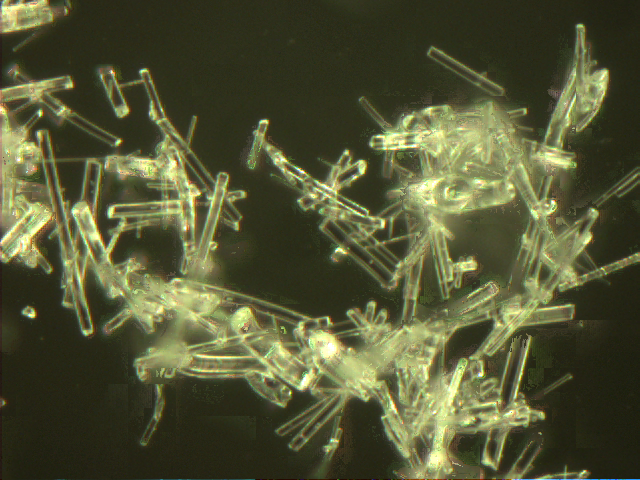

| − | |[[Image:Grid245.jpg|thumb| | + | |[[Image:Grid245.jpg|thumb|180px|Red-cyan anaglyph image of first object [http://vision.eng.shu.ac.uk/jan/grid.avi (2.0MB video)]]]||[[Image:Sugar.jpg|thumb|180px|Extended depth of field image for a piece of sugar (compare with 532 kByte [http://vision.eng.shu.ac.uk/jan/sugarstack.avi video of focus stack])]]||[[Image:Fiberdf10xdv.png|thumb|180px|Extended depth of field image of glass fibers (compare with 1.09 MByte [http://vision.eng.shu.ac.uk/jan/fiberdf10x.avi video of focus stack])]]||[[Image:SmallWheel.png|thumb|180px|Reconstruction of ''0.6 mm'' cogwheel using multiple focus sets]]||[[Image:Icing.png|thumb|180px|Icing of a cake as seen under the microscope]] |

| + | |- | ||

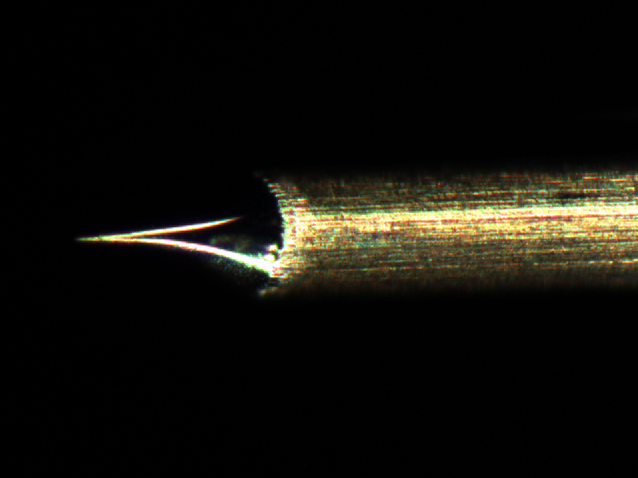

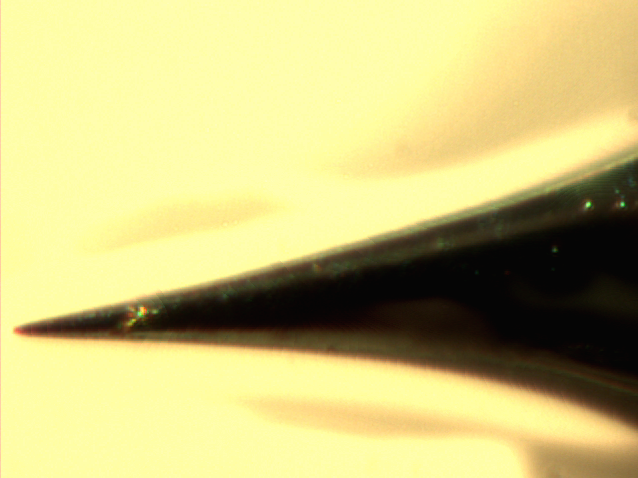

| + | |[[Image:dvtungsten3.png|thumb|180px|Optical microscope image of tungsten tip]]||[[Image:dvtungsten6.png|thumb|180px|Optical image of tungsten tip (high magnification)]]|||||| | ||

|- | |- | ||

|} | |} | ||
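The 0.6 mm cogwheel was reconstructed from multiple focus sets. As a hypothetical sketch of lateral stitching, assume the pixel offset of each tile is known exactly (for example from a motorised stage) and all height maps share the same height reference; the tiles can then be pasted into one mosaic and averaged where they overlap. Estimating the offsets by image registration, which real stitching usually needs, is not shown, and `stitch` is an illustrative name.

```python
def stitch(tiles):
    """Combine laterally offset height maps into one mosaic.

    tiles: list of (offset_y, offset_x, height_map) with non-negative integer
    offsets in pixels. Overlapping pixels are averaged; pixels not covered by
    any tile are returned as None.
    """
    height = max(oy + len(t) for oy, ox, t in tiles)
    width = max(ox + len(t[0]) for oy, ox, t in tiles)
    total = [[0.0] * width for _ in range(height)]
    count = [[0] * width for _ in range(height)]
    for oy, ox, t in tiles:
        for y, row in enumerate(t):
            for x, v in enumerate(row):
                total[oy + y][ox + x] += v
                count[oy + y][ox + x] += 1
    return [[total[y][x] / count[y][x] if count[y][x] else None
             for x in range(width)]
            for y in range(height)]
```

Plain averaging can leave visible seams if neighbouring tiles disagree in height; weighting each tile by its focus measure or blending towards the tile borders are possible alternatives.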

Since the idea for the algorithm was already settled, it was possible to implement it as a command-line tool in less than 4 days, using existing [[Mimas]] software (especially the [http://vision.eng.shu.ac.uk/jan/mimas/docs/arrayOperators.html operators for boost::multi_array]).

As this is a "quick hack", there is still plenty of room for improvement.

==Download==

===Old C++ Implementation===

The software for estimating height maps and images with extended depth of field is available for free (under the LGPL)! You first need to install [[Mimas_Announcement_Archive#Mimas-2.0|version 2.0]] of the [[Image:Mimasanim.gif|40px]] [[Mimas|Mimas Real-Time Computer Vision Library]] to be able to compile and run [http://vision.eng.shu.ac.uk/jan/depthoffocus-0.1.tar.bz2 depthoffocus-0.1] (652 kByte). The software also comes with sample files for generating photo-realistic 3D reconstructions using [http://www.povray.org/ POV-Ray]!
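The sample files in the package are not described on this page. As an independent sketch, a height map could be handed to POV-Ray as a grayscale image for its `height_field` object; the hypothetical functions below encode a height map (list of rows) as an 8-bit binary PGM file, scaling the lowest point to 0 and the highest to 255.

```python
def encode_pgm(heights):
    """Encode a height map (list of rows of numbers) as an 8-bit binary PGM (P5).

    Heights are scaled linearly so that the lowest point becomes 0 and the
    highest point becomes 255; a perfectly flat map becomes all zeros.
    """
    h, w = len(heights), len(heights[0])
    flat = [v for row in heights for v in row]  # row-major, top row first
    lo, hi = min(flat), max(flat)
    scale = 255.0 / (hi - lo) if hi > lo else 0.0
    header = f"P5\n{w} {h}\n255\n".encode("ascii")
    pixels = bytes(min(255, max(0, round((v - lo) * scale))) for v in flat)
    return header + pixels


def write_pgm(heights, path):
    """Write the encoded height map to a file."""
    with open(path, "wb") as f:
        f.write(encode_pgm(heights))
```

A scene could then load the file with something like `height_field { pgm "heights.pgm" smooth }`. Note that 8 bits give only 256 height levels, which may be too coarse for fine surface profiles.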

===New Ruby Implementation===

[[Image:New.gif]] I've ported the program to [http://www.ruby-lang.org/ Ruby]. The new implementation is based on the [[Image:Hornetseye.png|40px]] [[HornetsEye]] Ruby extension. It also requires [http://trollop.rubyforge.org/ Trollop]. You can copy and paste the source code from the '''[http://www.wedesoft.demon.co.uk/hornetseye-api/files/stack-txt.html depth from focus example]''' page. The source code is also included in the [[HornetsEye]] source package.

=See Also=

* [[MiCRoN]]

=External Links=

* Publications
** M. Boissenin, J. Wedekind, A.N. Selvan, B.P. Amavasai, F. Caparrelli, J.R. Travis: [http://dx.doi.org/10.1016/j.imavis.2006.03.009 Computer vision methods for optical microscopes]
** J. Wedekind: [http://vision.eng.shu.ac.uk/jan/MechRob-paper.pdf Focus set based reconstruction of micro-objects]
* Further Reading
** [http://en.wikipedia.org/wiki/Anaglyph_image Wikipedia article on anaglyph images]
** Ishita De, Bhabatosh Chanda, Buddhajyoti Chattopadhyay: [http://dx.doi.org/10.1016/j.imavis.2006.04.005 Enhancing effective depth-of-field by image fusion using mathematical morphology]
* Focus stacking software [http://www.hadleyweb.pwp.blueyonder.co.uk/ CombineZ5] and some [http://www.janrik.net/insects/ExtendedDOF/LepSocNewsFinal/EDOF_NewsLepSoc_2005summer.htm impressive examples by Rik Littlefield].
* [http://hirise.lpl.arizona.edu/anaglyph/index.php NASA anaglyph images of Mars]
* [http://www.youtube.com/results?search_query=yt3d%3Aenable%3Dtrue 3D videos on YouTube]
* [http://www.heliconsoft.com/ Helicon], proprietary focus stacking software


[[Category:Projects]]
[[Category:Micron]]

Latest revision as of 18:00, 17 March 2010

Contents |

[edit] Depth from Focus

[edit] 3D surface metrology

- You need to grab the focus stack with your microscope and digital camera.

- The results will be even better, if the illumination optics of the microscope can project a pattern.

- Non-destructive measurement of surface profiles

- With our experimental settings we observed (of course the result will depend on the quality of the microscope)

- Vertical resolution <math>\ge 0.2\ \mu m</math> (depending on aperture-size, magnification, projection-pattern and the surface properties of the object).

- Lateral resolution <math>\ge 2\ \mu m</math> (depends).

- Open Source (you are free to improve the code yourself if you redistribute it).

In general one can say: The lower the depth of field, the higher the resolution of the reconstruction. With high magnification (assuming constant numerical aperture) the resolution of the reconstruction goes up. The trade-off is that the reconstruction will cover a smaller area. This can be overcome by lateral stitching (e.g. cogwheel below).

[edit] Demonstration

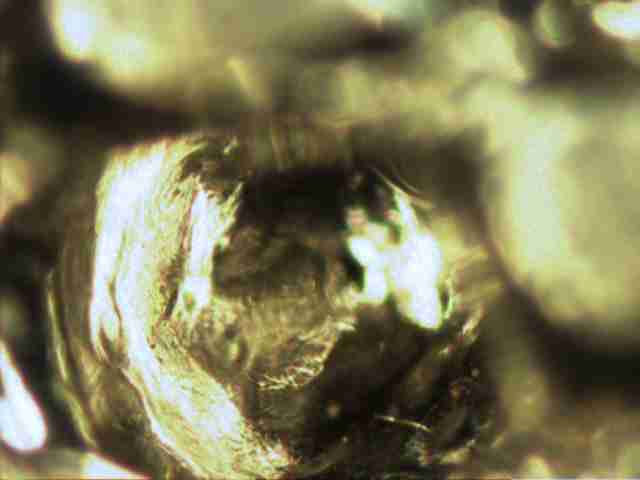

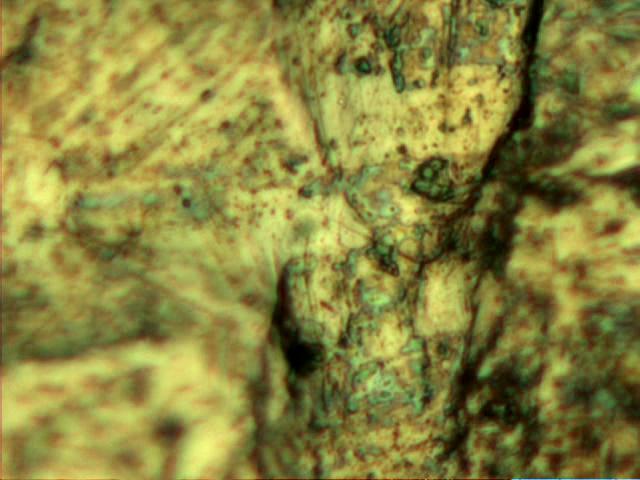

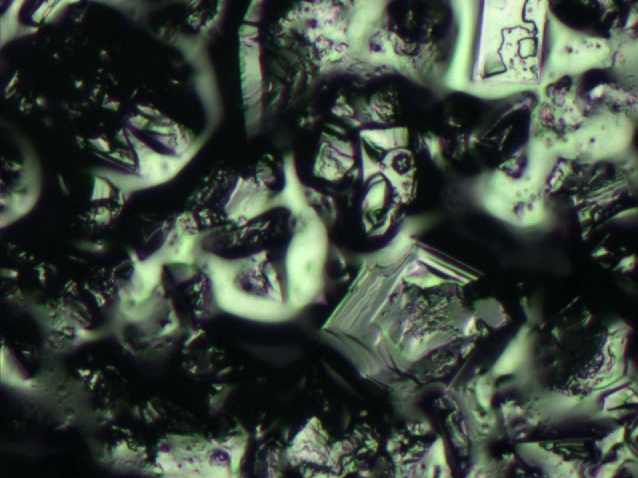

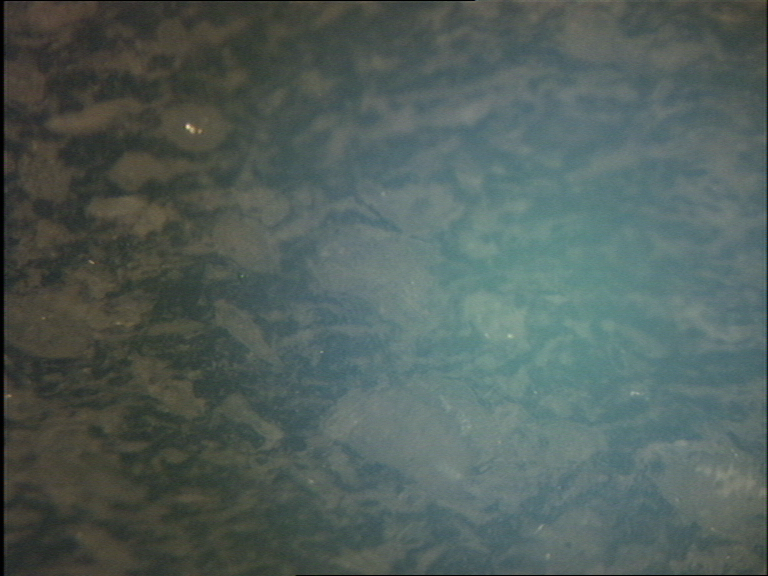

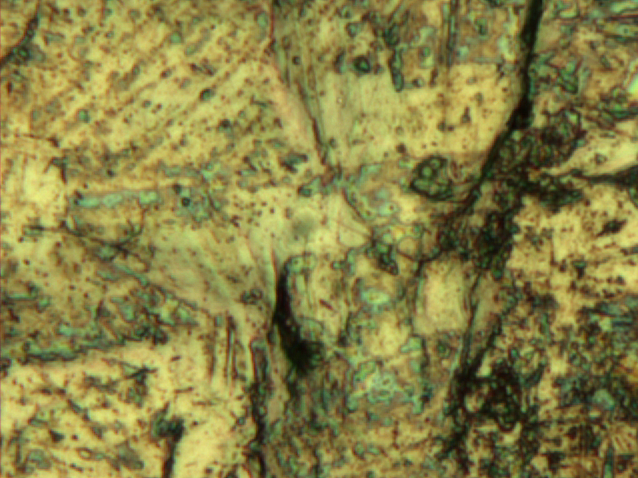

Here are some typical microscope images (Surfi-Sculpt refers to objects, which has been shaped using a power beam).

Piece of Suevit (enamel like material from meteorite impact) from the Nördlinger Ries, Leica DM RXA |

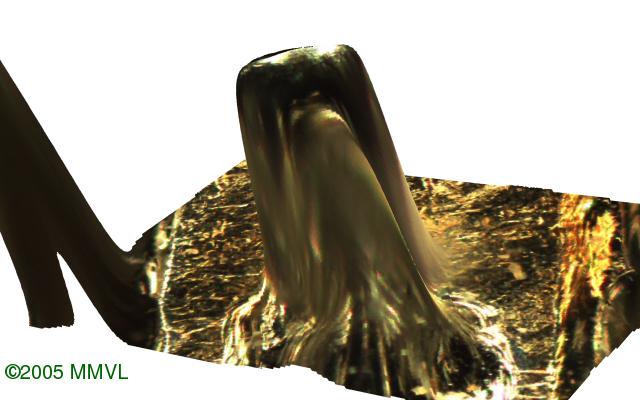

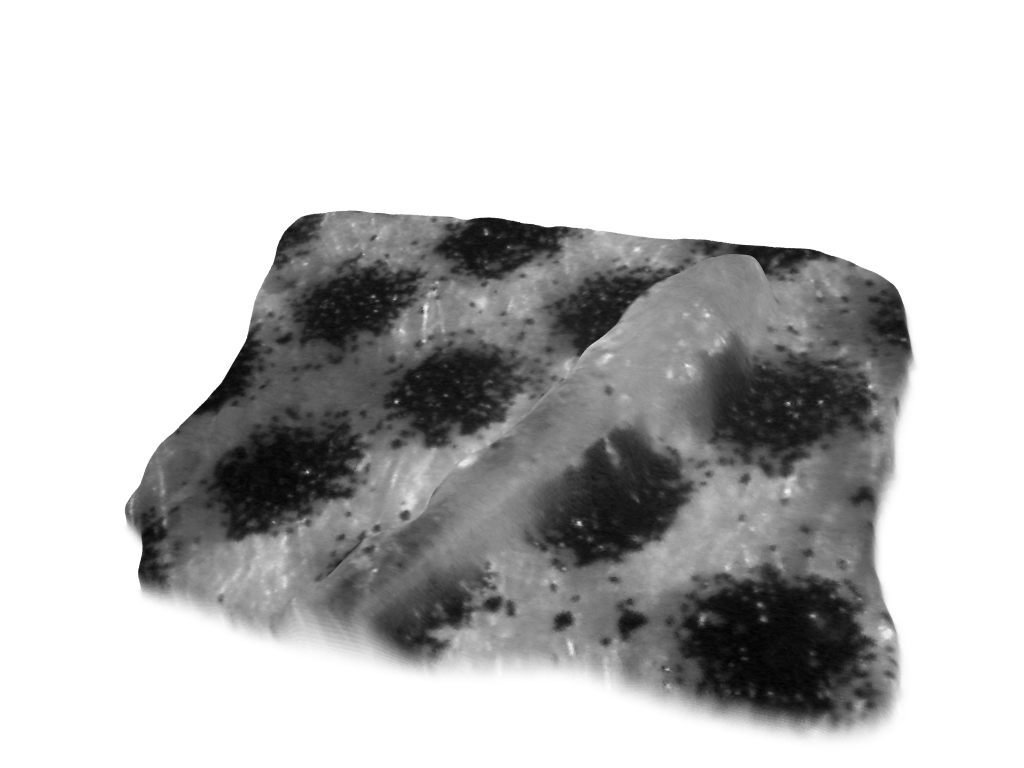

Using a focus-stack one can compute images with extended depth of focus:

Extended depth-of-field image showing part of the 10-pence coin (compare with 1.39 MByte video of focus stack) |

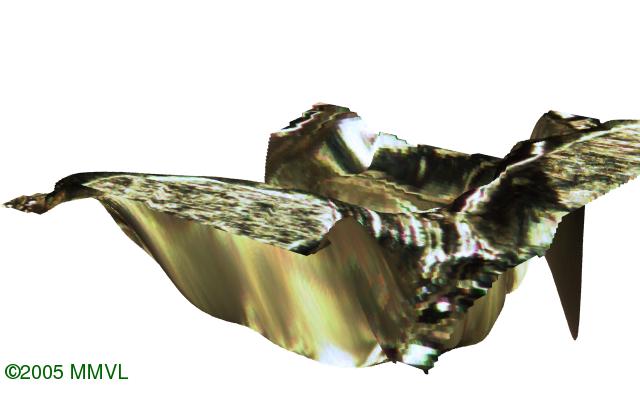

If the surface can be illuminated properly, one can even do a 3D-reconstruction of the surface:

3D reconstruction of first object (742kB video) |

3D reconstruction of second object (725kB video) |

[[Image:suevit20.png | 180px|3D reconstruction of suevit (1.4MB video)]] |  3D reconstruction of hair (1.4MB video) |

3D reconstruction of 10-pence coin surface. The profile is amplified 5 times (1.0 MByte video) |

Red-cyan anaglyph image of first object (2.0MB video) |

Extended depth of field image for a piece of sugar (compare with 532 kByte video of focus stack) |

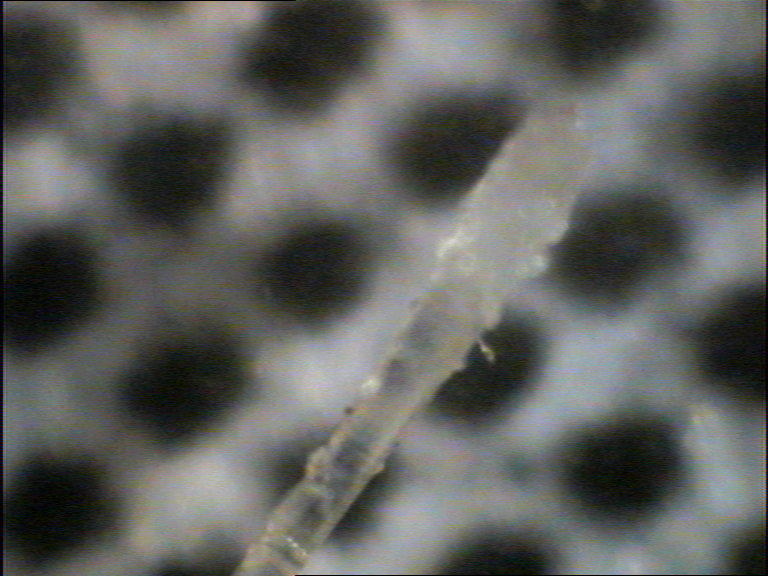

Extended depth of field image of glass fibers (compare with 1.09 MByte video of focus stack) |

||

As the idea for the algorithm was fixed already, it was possible to implement the algorithm as a command-line tool in less than 4 days, using existing Mimas-software (exspecially the operators for boost::multi_array).

As this is a "quick hack", there's still lots of space for improvements.

[edit] Download

[edit] Old C++ Implementation

The software for estimating height-maps and images with extended depth-of-field is available for free (under the LGPL)! You first need to install version 2.0 of the ![]() Mimas Real-Time Computer Vision Library to be able to compile and run depthoffocus-0.1 (652 kByte). The software also comes with sample files to generate photo-realistic 3D-reconstruction using POVRay!

Mimas Real-Time Computer Vision Library to be able to compile and run depthoffocus-0.1 (652 kByte). The software also comes with sample files to generate photo-realistic 3D-reconstruction using POVRay!

[edit] New Ruby Implementation

![]() I've ported the program to Ruby. The new implementation is based on the

I've ported the program to Ruby. The new implementation is based on the ![]() HornetsEye Ruby-extension. It also requires TrollOp. You can copy-and-paste the source code from the depth from focus example page. The source code also comes with the HornetsEye source package.

HornetsEye Ruby-extension. It also requires TrollOp. You can copy-and-paste the source code from the depth from focus example page. The source code also comes with the HornetsEye source package.

[edit] See Also

[edit] External Links

- Publications

- M. Boissenin, J. Wedekind, A.N. Selvan, B.P. Amavasai, F. Caparrelli, J.R. Travis: Computer vision methods for optical microscopes

- J. Wedekind: Focus set based reconstruction of micro-objects

- Further Reading

- Wikipedia article on anaglyph images

- Ishita De, Bhabatosh Chanda, Buddhajyoti Chattopadhyay: Enhancing effective depth-of-field by image fusion using mathematical morphology

- Focus stitching software CombineZ5 and some impressive examples by Rik Littlefield.

- NASA anaglyph images of Mars

- 3D videos on Youtube

- Helicon proprietary focus stitching software