VIEW-FINDER

A prototype of the VIEW-FINDER SLAM procedure, based on an SIR-RB particle filter implementation, running in a corridor at the ALCOR Lab, Sapienza University of Rome. Ladar and odometry data were collected with a Pioneer robot using the URG-04LX laser range finder and the Player software platform.

Nitrogen gas evolution in a room-fire scenario (simulated with NIST's FDS-SMV)

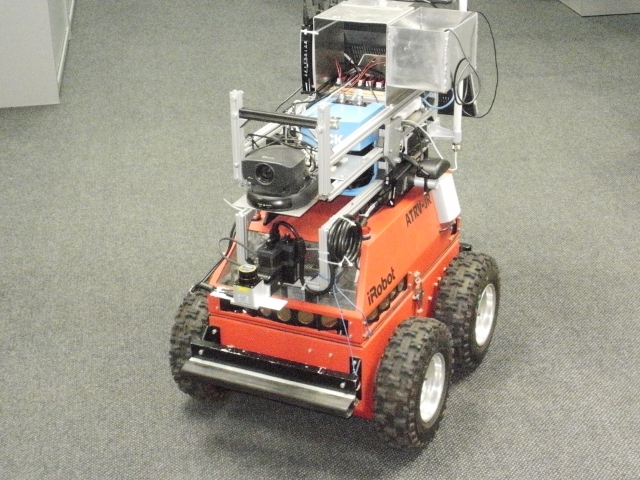

The VIEW-FINDER project

Official VIEW-FINDER page: "Vision and Chemi-resistor Equipped Web-connected Finding Robots"

In an emergency caused by a fire or other crisis, a necessary but time-consuming prerequisite, which can delay the actual rescue operation, is to establish whether the ground can be entered safely by human emergency workers. The objective of the VIEW-FINDER project is to develop robots whose primary task is gathering data. The robots are equipped with sensors that detect the presence of chemicals and, in parallel, collect stereo/mono image and ladar data, which are forwarded to an advanced base station. The project comprises two scenarios: indoor and outdoor.

Objective

The VIEW-FINDER project is funded by the European Union under the Sixth Framework Programme (FP6), project no. 045541.

The VIEW-FINDER project is an Advanced Robotics project with 9 European partners, including South Yorkshire Fire and Rescue Service, that seeks to use a semi-autonomous robotic system to establish ground safety in the event of a fire. Its primary aim is to gather data (visual and chemical) to assist fire rescue personnel after a disaster has occurred. A base station will combine the gathered information with information retrieved from the large-scale GMES information bases.

The project will address key issues related to 2.5D map building, localisation and reconstruction; interfacing local command information with external sources; autonomous robot navigation; and human-robot interfaces (base station). Partners SHU, UoR, IES and GA are predominantly involved in the indoor scenario, and RMA and DUTH in the outdoor scenario.

The VIEW-FINDER system will be a complete semi-autonomous system: the individual robot-sensors operate autonomously within the limits of the tasks assigned to them, that is, they autonomously navigate through and inspect an area. Central Operations Control (CoC) will assign tasks to the robots and only monitor their execution. However, the CoC can reassign tasks or refine the tasks of the ground robots. System-human interaction at the CoC will be facilitated through multimodal interfaces, in which graphical displays play an important but not exclusive role.

Although the robots can operate autonomously, human operators will be able to monitor their operations and send high-level task requests as well as low-level commands through the interface to some nodes of the ground system. The human-computer interface (base station) must ensure that the human supervisor and the human interveners on the ground are provided with a reduced yet relevant overview of the area under investigation, including the robots and the human rescue workers within it.

The project is coordinated by the Materials and Engineering Research Institute at Sheffield Hallam University and will officially end in November 2009.

Partners

Coordinator

- Sheffield Hallam University, Materials and Engineering Research Institute (MERI, MMVL), Sheffield, United Kingdom

Academic Research Partners

- Royal Military Academy - Patrimony, Belgium

- Democritus University of Thrace - Xanthi, Greece

- Sapienza University of Rome, Italy

- Industrial Research Institute for Automation and Measurements - PIAP, Poland

Industrial Partners

- Space Applications Services, Belgium

- Intelligence for Environment and Security SRL - IES Solutions SRL, Italy

- South Yorkshire Fire and Rescue Service, United Kingdom

- Galileo Avionica S.p.A., Italy

See Also

- EURON/IARP workshop, Jan 2008

- RISE 2010 Conference - January 20-21 2010, Sheffield Hallam University. The call and further details are available to download here.

External Links

- Publicity

- System architecture/integration

- Mapping and Localisation

- Sources of Inspiration - Robotics