Nanorobotics

The Nanorobotics Project

A large new nanotechnology research programme, "Nanorobotics - technologies for simultaneous multidimensional imaging and manipulation of nanoobjects" (grants GR/S85696/01 and GR/S85689/01), is to be established at the University of Sheffield from autumn 2004. It is funded by a £2.3 million grant from the RCUK Basic Technology Research Programme. The programme, led by the Engineering Materials Department of the University of Sheffield, will be a collaboration between project partners at Sheffield (project leader), Sheffield Hallam and Nottingham.

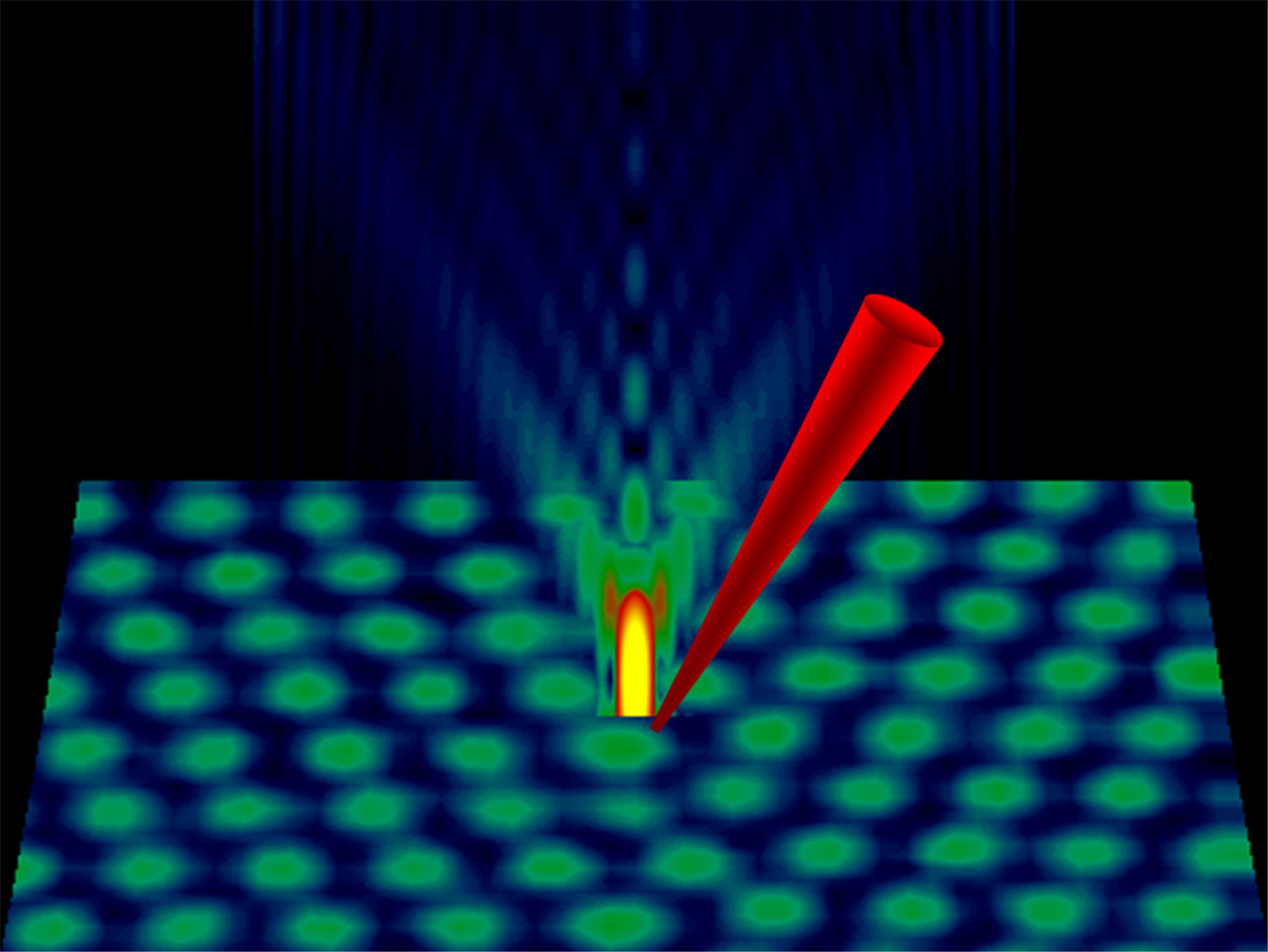

Many new nanotechnology research fields require a high degree of precision in both observing and manipulating materials at the atomic level. The new Sheffield Nanorobotics group will develop the advanced nanorobotics technology needed to manipulate materials at this scale, a million times smaller than a grain of sand. Several technologies will be integrated to act together as real-time nanoscale "eyes" and "hands": advanced nanorobotics, high-resolution ion/electron microscopy, image processing and vision control, and sophisticated sensors. Together they will make it possible to manipulate matter at the scale of atoms and molecules.

The Nanorobotics programme will thus allow unique experiments to be carried out on the manipulation and observation of the smallest quantities of materials, including research into nanoscale electronic, magnetic and electromechanical devices, manipulation of fullerenes and nanoparticles, nanoscale friction and wear, biomaterials, and systems for carrying out quantum information processing.

The project's deadline is the end of July 2009.

Workpackage 5: Intelligent Nanorobotic Control

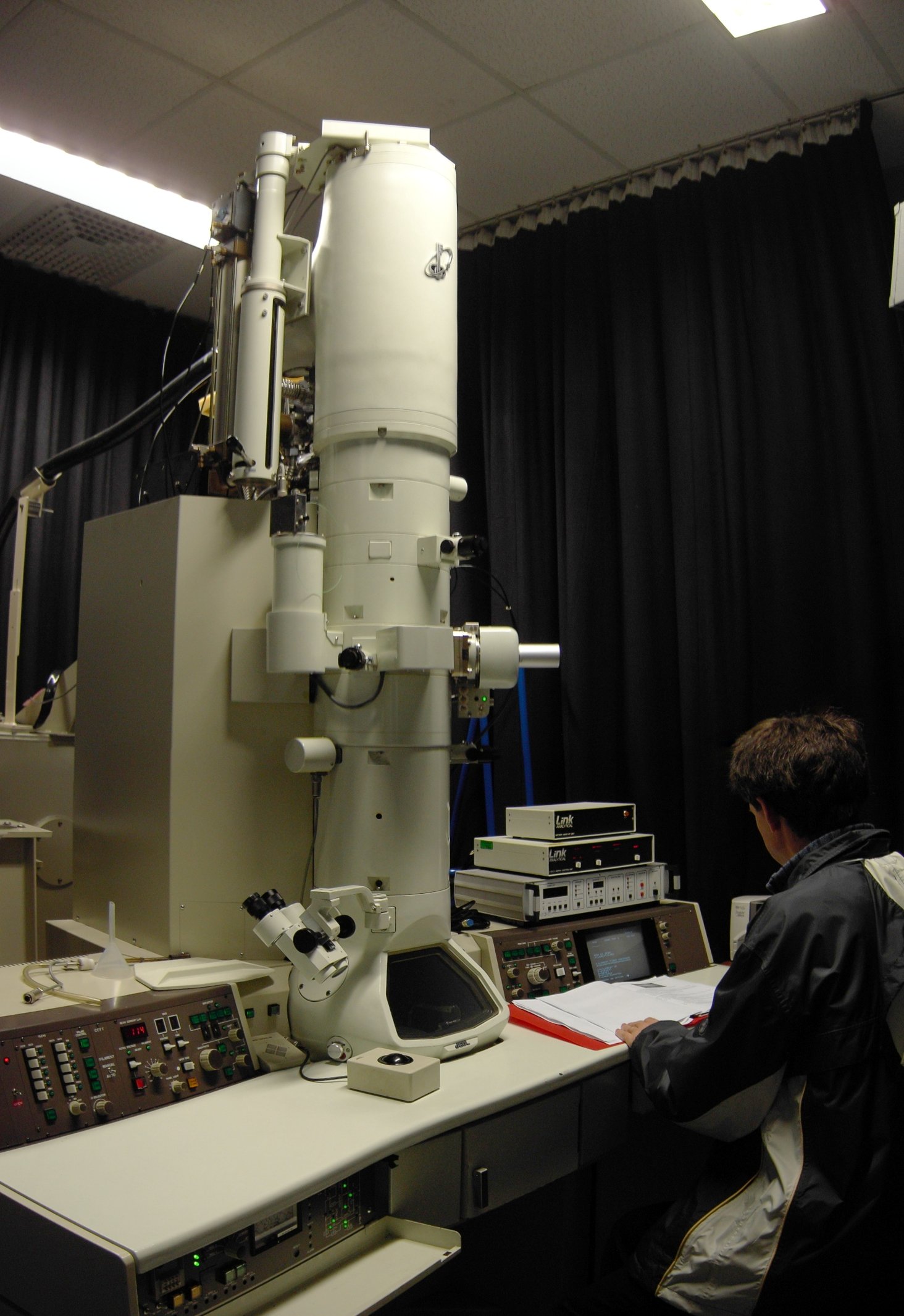

Visual calibration followed by two-dimensional telemanipulation (also available as an MPEG-4 video, 45 MB). The observed deviation may be due to drift during calibration, which was not taken into account. This video was created with free TEM software running under GNU/Linux.
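The caption attributes the residual deviation to drift during calibration that was not modelled. The sketch below shows how a calibration could absorb a linear drift. It assumes that image coordinates depend affinely on the commanded stage coordinates, plus a drift that grows linearly with time. Each image axis is then fitted by least squares as p = a·s_x + b·s_y + c + d·t. This is not the calibration used in the video, and the function names, sample path and synthetic numbers are hypothetical.

```python
def solve(matrix, rhs):
    """Solve a small dense linear system by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    m = [list(row) + [value] for row, value in zip(matrix, rhs)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_axis(samples, values):
    """Fit value = a*sx + b*sy + c + d*t by least squares via the normal equations."""
    rows = [(sx, sy, 1.0, t) for sx, sy, t in samples]
    xtx = [[sum(row[i] * row[j] for row in rows) for j in range(4)] for i in range(4)]
    xty = [sum(row[i] * v for row, v in zip(rows, values)) for i in range(4)]
    return solve(xtx, xty)


def calibrate(samples, image_points):
    """samples: (sx, sy, t) commanded stage position and time; image_points: observed (x, y).

    Returns two coefficient lists [a, b, c, d], one per image axis; d is the drift rate.
    """
    return (fit_axis(samples, [p[0] for p in image_points]),
            fit_axis(samples, [p[1] for p in image_points]))


if __name__ == "__main__":
    grid = [(i, j) for i in (-1.0, 0.0, 1.0) for j in (-1.0, 0.0, 1.0)]
    # Revisit the centre at the end: for this raster alone t = 3*sx + sy + 4,
    # so drift would be indistinguishable from stage motion (singular fit).
    path = grid + [(0.0, 0.0)]
    samples = [(sx, sy, float(t)) for t, (sx, sy) in enumerate(path)]
    # Synthetic observations: 50 px per stage unit, slight shear, 0.5 px/s drift in x.
    observed = [(50 * sx + 2 * sy + 320 + 0.5 * t, -sx + 48 * sy + 240)
                for sx, sy, t in samples]
    coeffs_x, coeffs_y = calibrate(samples, observed)
    print("x:", [round(v, 3) for v in coeffs_x])
    print("y:", [round(v, 3) for v in coeffs_y])
```

Visiting one stage position twice is what makes the drift rate identifiable. With the 3x3 raster alone, t is an exact linear function of s_x and s_y, so the normal equations are singular. With NumPy available, `numpy.linalg.lstsq` would replace the hand-written solver.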

Lucas-Kanade tracker applied to high-magnification and low-magnification video. The tracker loses the indenter where it moves too fast for the tracking algorithm.
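The failure mode in the caption follows from how the tracker works. Lucas-Kanade linearises the image around the current estimate, so it only converges when the motion between frames is small compared with the scale of the image features. Below is a minimal pure-Python sketch of one common formulation: inverse compositional, translation only, single resolution level. It assumes grey-value frames stored as lists of rows. The tracker used for the video may differ, and `track_translation` and the synthetic scene are hypothetical.

```python
import math


def bilinear(img, x, y):
    """Sample img (list of rows) at real-valued (x, y); indices are clamped to the image."""
    h, w = len(img), len(img[0])
    x0 = min(max(int(math.floor(x)), 0), w - 2)
    y0 = min(max(int(math.floor(y)), 0), h - 2)
    fx, fy = x - x0, y - y0
    top = (1 - fx) * img[y0][x0] + fx * img[y0][x0 + 1]
    bottom = (1 - fx) * img[y0 + 1][x0] + fx * img[y0 + 1][x0 + 1]
    return (1 - fy) * top + fy * bottom


def track_translation(frame0, frame1, origin, size, iterations=30, eps=1e-4):
    """Estimate the (dx, dy) by which a size x size patch at origin in frame0 moved in frame1."""
    ox, oy = origin
    coords = [(ox + u, oy + v) for v in range(size) for u in range(size)]
    template = [frame0[y][x] for x, y in coords]
    # Template gradients by central differences; computed once and reused every iteration.
    grads = [((frame0[y][x + 1] - frame0[y][x - 1]) / 2.0,
              (frame0[y + 1][x] - frame0[y - 1][x]) / 2.0) for x, y in coords]
    hxx = sum(gx * gx for gx, _ in grads)
    hxy = sum(gx * gy for gx, gy in grads)
    hyy = sum(gy * gy for _, gy in grads)
    det = hxx * hyy - hxy * hxy  # the 2x2 Hessian must be invertible (textured patch)
    px = py = 0.0
    for _ in range(iterations):
        bx = by = 0.0
        for (x, y), t, (gx, gy) in zip(coords, template, grads):
            err = bilinear(frame1, x + px, y + py) - t
            bx += gx * err
            by += gy * err
        dx = (hyy * bx - hxy * by) / det
        dy = (hxx * by - hxy * bx) / det
        # Inverse compositional update; for a pure translation it is a subtraction.
        px, py = px - dx, py - dy
        if dx * dx + dy * dy < eps * eps:
            break
    return px, py


if __name__ == "__main__":
    def scene(x, y):
        # Smooth synthetic texture standing in for a microscope image.
        return math.sin(0.35 * x) * math.cos(0.3 * y) + 0.5 * math.sin(0.2 * (x + y))

    frame0 = [[scene(x, y) for x in range(40)] for y in range(40)]
    # Second frame: content moved by (+1.3, -0.7) pixels.
    frame1 = [[scene(x - 1.3, y + 0.7) for x in range(40)] for y in range(40)]
    print(track_translation(frame0, frame1, origin=(12, 12), size=12))
```

Increasing the shift in the demo to several pixels, a sizeable fraction of the texture's wavelength, typically makes the estimate diverge or lock onto the wrong feature. That matches the caption, where the indenter is lost when it moves too fast. Coarse-to-fine (pyramidal) Lucas-Kanade is the usual remedy.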

Within this workpackage, the MMVL's tasks are to:

- provide real-time position feedback for controlling the nano-indenter

- use input from an S-Video or, alternatively, a FireWire camera

- estimate the x- and y-position of the tip

- estimate the depth of the tip using x- or y-image wobbling (a technique already commonly used when focussing on an object manually)

- provide a user interface for telemanipulation and automated tasks

- correlate AFM images with TEM images
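Depth estimation by wobbling rests on a simple observation. In the usual wobbler technique, an object away from the focal plane appears to jump back and forth while the image is wobbled, whereas an object in focus stays put. To first order, the size of the jump grows linearly with the defocus. The minimal sketch below assumes the two wobble extremes are available as grey-value arrays and that the displacement is a whole number of pixels. It finds the shift with the smallest mean squared difference by exhaustive search, then scales it with a calibration constant. The names `best_shift`, `defocus_from_wobble` and `gain` are hypothetical, and `gain` would have to be measured for the microscope.

```python
import random


def best_shift(a, b, max_shift):
    """Integer (dx, dy) minimising the mean squared difference between a(x, y) and b(x+dx, y+dy)."""
    h, w = len(a), len(a[0])
    best = None
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            total, count = 0.0, 0
            # Only compare pixels where both images overlap for this shift.
            for y in range(max(0, -dy), min(h, h - dy)):
                for x in range(max(0, -dx), min(w, w - dx)):
                    d = a[y][x] - b[y + dy][x + dx]
                    total += d * d
                    count += 1
            score = total / count
            if best is None or score < best[0]:
                best = (score, dx, dy)
    return best[1], best[2]


def defocus_from_wobble(img_plus, img_minus, gain, max_shift=5):
    """Hypothetical linear model for x-wobbling: defocus = gain * x-shift between the extremes."""
    dx, _ = best_shift(img_plus, img_minus, max_shift)
    return gain * dx


if __name__ == "__main__":
    rng = random.Random(1)
    size = 24
    a = [[rng.random() for _ in range(size)] for _ in range(size)]
    # Synthetic second wobble extreme: same texture displaced 3 pixels to the right.
    b = [[a[y][x - 3] if x >= 3 else 0.0 for x in range(size)] for y in range(size)]
    print(best_shift(a, b, 5), defocus_from_wobble(a, b, gain=0.5))
```

Sub-pixel accuracy would typically come from refining around the integer optimum, for example by fitting a parabola to the scores of the neighbouring shifts.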

Further projects beyond the workpackage:

- assist in hardness measurements by applying optical flow estimation to TEM videos

- perform offline measurements on video data from indentation experiments (e.g. see this publication)

- estimate drift

- estimate the movement of the tip

- determine object boundaries

- measure the fixed point and accuracy of rotary drives
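One simple way to find the fixed point of a rotary drive is to track a feature while the drive rotates and fit a circle to its image positions. This is an illustrative choice, not necessarily the project's method. The sketch uses the algebraic least-squares (Kåsa) fit, which solves x² + y² + D·x + E·y + F = 0 for D, E and F. The centre (-D/2, -E/2) is the estimated fixed point, and the RMS radial residual serves as a rough accuracy figure. `fit_circle` and the sample data are hypothetical.

```python
import math


def det3(m):
    """Determinant of a 3x3 matrix given as a list of rows."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def fit_circle(points):
    """Kåsa fit. Returns ((cx, cy), radius, rms_radial_residual)."""
    n = len(points)
    # Centre the data first to keep the normal equations well conditioned.
    mx = sum(p[0] for p in points) / n
    my = sum(p[1] for p in points) / n
    rows = [(x - mx, y - my, 1.0) for x, y in points]
    rhs = [-(u * u + v * v) for u, v, _ in rows]
    # Normal equations (A^T A) [D, E, F]^T = A^T rhs, solved with Cramer's rule.
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    atb = [sum(r[i] * v for r, v in zip(rows, rhs)) for i in range(3)]
    det = det3(ata)
    coeffs = []
    for k in range(3):
        m = [row[:] for row in ata]
        for i in range(3):
            m[i][k] = atb[i]
        coeffs.append(det3(m) / det)
    d, e, f = coeffs
    ux, uy = -d / 2.0, -e / 2.0  # centre in centred coordinates
    radius = math.sqrt(ux * ux + uy * uy - f)
    cx, cy = ux + mx, uy + my
    residuals = [math.hypot(x - cx, y - cy) - radius for x, y in points]
    rms = math.sqrt(sum(res * res for res in residuals) / n)
    return (cx, cy), radius, rms


if __name__ == "__main__":
    # Synthetic tracked positions: centre (120.5, 80.25), radius 30 px, +/-0.2 px radial jitter.
    pts = []
    for k, angle in enumerate(range(0, 360, 40)):
        r = 30.0 + (0.2 if k % 2 else -0.2)
        a = math.radians(angle)
        pts.append((120.5 + r * math.cos(a), 80.25 + r * math.sin(a)))
    print(fit_circle(pts))
```

The algebraic fit is known to be biased towards smaller radii when the points cover only a short, noisy arc. In that case, refining this estimate with a geometric (orthogonal-distance) fit is the usual improvement.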

Project Outputs

Publications

Journal and conference papers

- A. J. Lockwood, J. Wedekind, R. S. Gay, M. S. Bobji, B. Amavasai, M. Howarth, G. Möbus, B. J. Inkson. Advanced transmission electron microscope triboprobe with automated closed-loop nanopositioning. Measurement Science and Technology, vol. 21, no. 7.

- M. Boissenin, J. Wedekind, B. P. Amavasai, J. R. Travis, F. Caparrelli. Fast pose estimation for microscope images using stencils. Proceedings of the 2006 IEEE SMC UK-RI 5th Chapter Conference on Advances in Cybernetic Systems (AICS 2006), Sheffield Hallam University, UK, 7-8 September 2006.

- D. Bhowmik, B. P. Amavasai, T. J. Mulroy. Real-Time Object Classification on FPGA Using Moment Invariants and Kohonen Neural Networks. Proceedings of the IEEE SMC UK-RI 5th Chapter Conference on Advances in Cybernetic Systems (AICS 2006), pp. 43-48 (2006).

- M. Boissenin, B. P. Amavasai, J. Wedekind, R. Saatchi. Shape information: using state space to decimate templates. BMVA Symposium on Shape Representation, Analysis and Perception, London, 5 November 2007.

- J. Wedekind, B. P. Amavasai, K. Dutton. Steerable filters generated with the hypercomplex dual-tree wavelet transform. 2007 IEEE International Conference on Signal Processing and Communications (ICSPC), pp. 1291-1294, Dubai, United Arab Emirates (2007).

- B. P. Amavasai, J. Wedekind, M. Boissenin, F. Caparrelli, A. Selvan. Machine vision for macro, micro, and nano robot environments. BMVA Symposium on Robotics and Vision, London, 14 May 2008.

- J. Wedekind, B. P. Amavasai, K. Dutton, M. Boissenin. A machine vision extension for the Ruby programming language. 2008 International Conference on Information and Automation (ICIA), pp. 991-996, Zhangjiajie, China (2008).

Other

- M. Boissenin: Template Reduction of Feature Point Models for Rigid Objects and Application to Tracking in Microscope Images, PhD, Feb 2009, Sheffield Hallam University

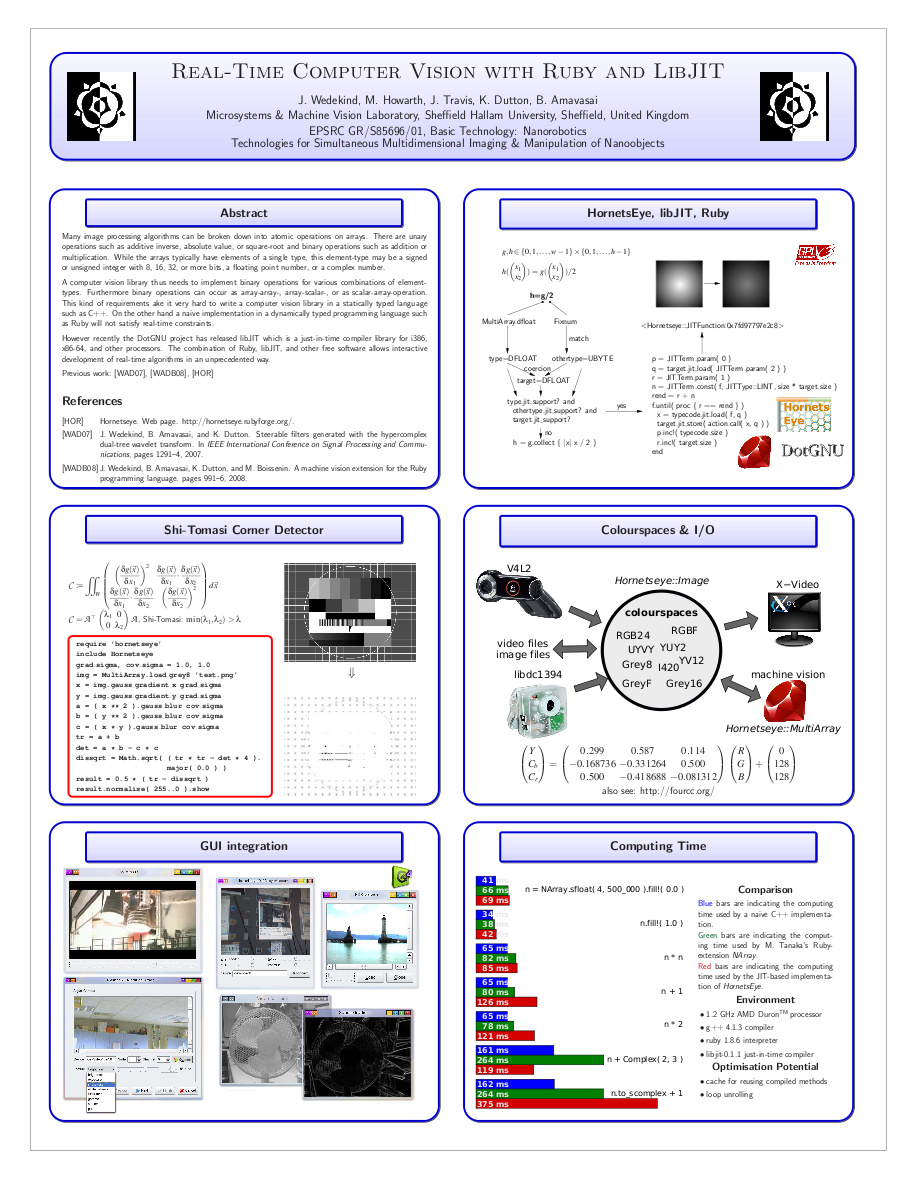

- J. Wedekind, M. Howarth, J. Travis, K. Dutton, B. Amavasai, Real-time computer vision with Ruby and libJIT. Poster presentation, The 13th AVA Christmas Meeting, Bristol, United Kingdom (2008)

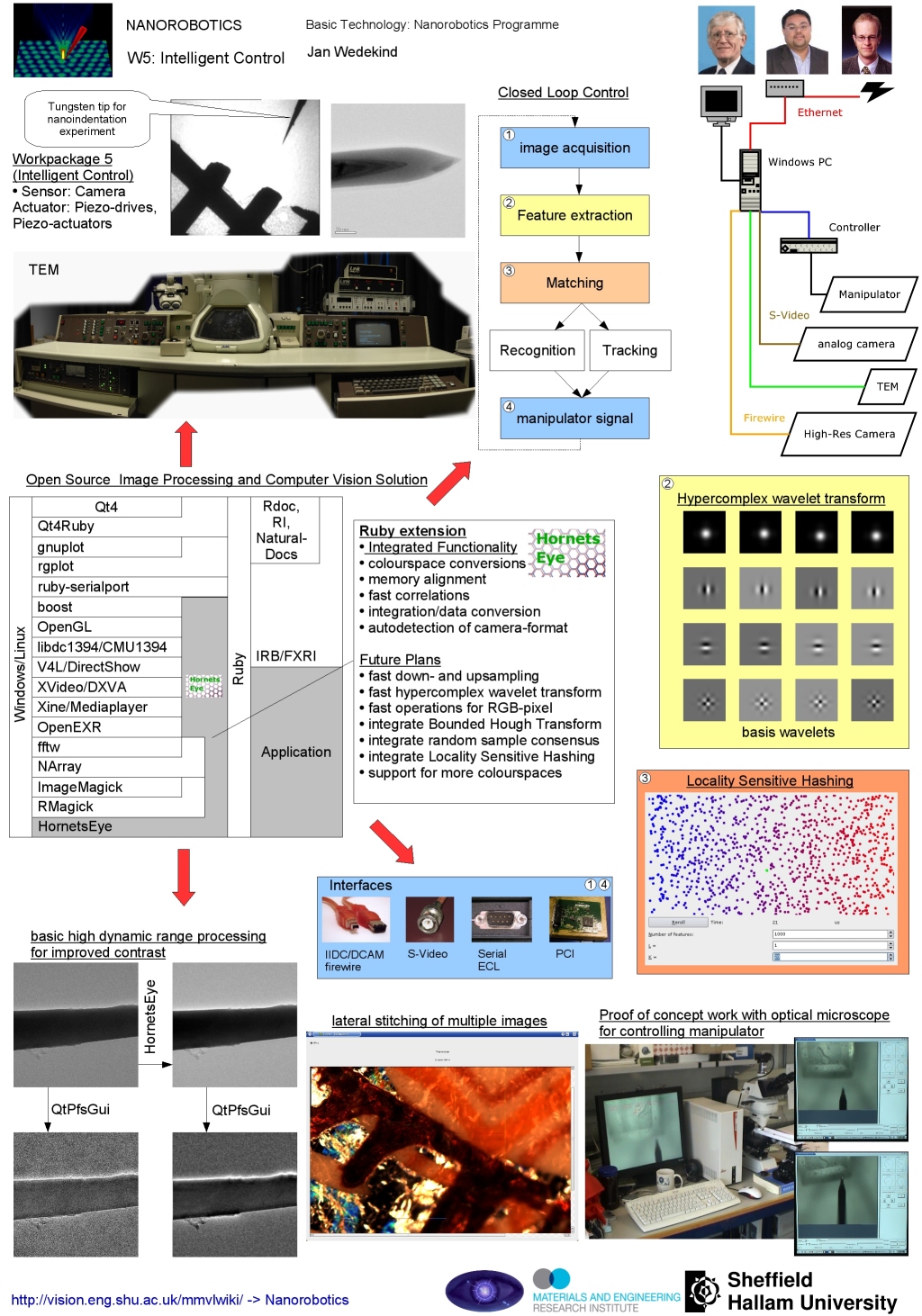

- J.Wedekind, B. Amavasai, J.Travis: Nanorobotics workshop poster presentation, Nanorobotics workshop, Sheffield, United Kingdom (2007)

- B.P. Amavasai: Machine vision for macro, micro and nano robot environments at the BMVA meeting on Vision and Robotics (also see list of BMVA meetings)

- anonymous submission(s)

Software

-

TEM vision software

TEM vision software

- software for programmed movements nano-indenter

- software for telemanipulation (controlling nanoindenter with camera feedback)

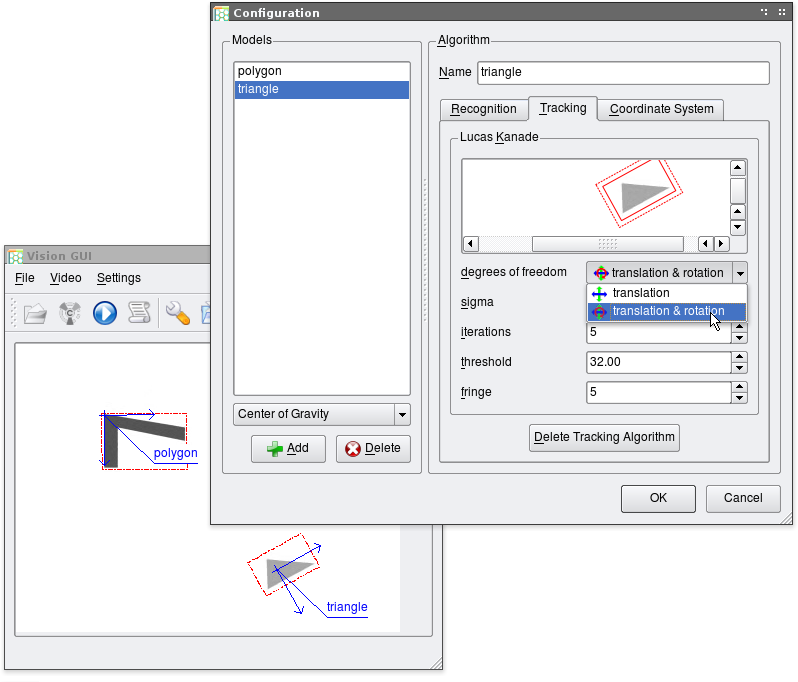

- vision software with plugins for 2D recognition and tracking

-

HornetsEye Ruby-extension

HornetsEye Ruby-extension

- integration of video-I/O libraries

- fast generic array operations
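The telemanipulation software closes the loop between camera and nano-indenter. The minimal sketch below shows such a loop with a purely proportional controller; the controller actually used is not described on this page. In each cycle, the tracked tip position is compared with the target in image coordinates. A fraction `gain` of that error is then converted into a stage step using an estimated pixel scale. `SimulatedStage` stands in for both the hardware and the vision tracker, and all names and numbers are hypothetical.

```python
class SimulatedStage:
    """Hypothetical stage plus tracker: the tip appears at pixels_per_unit * position + offset."""

    def __init__(self, pixels_per_unit, offset, drift_per_step=(0.0, 0.0)):
        self.position = [0.0, 0.0]  # stage coordinates (arbitrary units)
        self.pixels_per_unit = pixels_per_unit
        self.offset = offset
        self.drift = drift_per_step  # uncommanded motion added on every move

    def move_by(self, dx, dy):
        self.position[0] += dx + self.drift[0]
        self.position[1] += dy + self.drift[1]

    def observe(self):
        """Stand-in for the vision tracker: tip position in image coordinates (pixels)."""
        return (self.pixels_per_unit * self.position[0] + self.offset[0],
                self.pixels_per_unit * self.position[1] + self.offset[1])


def servo_to(stage, target, pixels_per_unit_estimate, gain=0.5, tolerance=0.5, max_steps=100):
    """Proportional visual servoing; returns (cycles used, final observed position)."""
    for step in range(max_steps):
        x, y = stage.observe()
        ex, ey = target[0] - x, target[1] - y  # image-space error in pixels
        if ex * ex + ey * ey <= tolerance * tolerance:
            return step, (x, y)
        # Convert a fraction of the pixel error into a stage step.
        stage.move_by(gain * ex / pixels_per_unit_estimate,
                      gain * ey / pixels_per_unit_estimate)
    raise RuntimeError("servo loop did not converge within max_steps")


if __name__ == "__main__":
    stage = SimulatedStage(pixels_per_unit=50.0, offset=(320.0, 240.0),
                           drift_per_step=(0.002, 0.0))
    print(servo_to(stage, target=(400.0, 200.0), pixels_per_unit_estimate=50.0))
```

Under constant drift, a purely proportional loop settles at a residual image error of about (drift per step in pixels) / gain. For example, 0.1 px of drift per step with a gain of 0.5 leaves about 0.2 px. An integral term is therefore a common addition when drift matters.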

See Also

- HornetsEye

- Mimas

- MiCRoN

- MINIMAN

- Registration of TEM images

- Micro-manipulator

- Hypercomplex Wavelets

- Lucas-Kanade tracker

- BMVA2008-Talk

External Links

- Project Partners

- Sheffield Hallam University

- University of Sheffield

- Nanorobotics group at Sheffield University (project leader)

- Project announcement

- University of Sheffield Engineering Faculty FEGTEM

- Publication: Indentation mechanics of Cu-Be quantified by an in situ transmission electron microscopy mechanical probe

- University of Nottingham

- Journals

- Wikibooks' Nanowiki

- JEOL 3010

- Links999 Nanotech Page

- CAVIAR: Image-based recognition project

- TEM

- Nanorobotic Manipulation System at the Fukuda Lab

- Micro Stereo Lithography

- An Introduction to Intelligent and Autonomous Control

- Fabry-Perot interferometer

- Overview of fiber-optical sensors

- Tomography with electron microscopes

- A to Z of nanotechnology

- Manufacturing and handling of micro-particles at UCLA

- What is nanopantography?

- Molecular Machines research group, MIT Media Lab

- Microgripper at the University of Toronto

- Nano-imprinting to improve LEDs

- Efficiently Producing Quantum Dots

- Nanohand project

- Software

- Tomography with electron microscopes

- Nano-Hive Nanospace Simulator

- IMOD tomography software (proprietary license; redistribution of the source code is not allowed)

- AMIRA 3D segmentation and visualisation (commercial software)

- CTSim: Computed Tomography Simulator

- Tomography at Queen Mary University of London

- Tomography demonstration at EPFL

- Gnome X Scanning Microscopy

- SUGAR open source MEMS simulator

- EMAN Software for Single Particle Analysis and Electron Micrograph Analysis

- Nanotechnology in consumer products

- NASA Virtual Microscope